What is BERT—Do I Need to Change My SEO Strategy?

This post was selected as one of the top digital marketing articles of the week by UpCity, a B2B ratings and review company for digital marketing agencies and other marketing service providers.

2019 saw many Google Search core algorithm updates that made huge waves in the digital marketing landscape. The largest of them was the BERT algorithm update of October 21, which affected roughly 10% of all searches. Google lauded it as the biggest leap forward in the past five years. But what changed? And how does that affect you, the businesses and marketers of the world?

The Beginnings

Keywords and searches go hand in hand, and they have since the beginning of search. Because of this relationship, our search language has taken a shape of its own as users ‘get a feel’ for which phrases and terminology will actually deliver the correct answers. BERT, an application of Natural Language Processing (NLP), helps make our searches more like our everyday conversations by using technology to understand intent.

“No matter what you’re looking for, or what language you speak, we hope you’re able to let go of some of your keyword-ese and search in a way that feels natural for you.”

– Google’s blog regarding the BERT update.

It’s a search engine’s job to figure out what we’re searching for and give us helpful information from the web, regardless of how we formulate our search. Where searches have sometimes been strings of words that we assume Google understands, BERT has evolved search understanding to reflect how we naturally ask questions.

Let’s dive deeper into the mechanics of what makes the BERT update so important to users and digital marketers. We’ll also look at what changes (if any) you will need to make to your SEO marketing campaigns.

What is BERT?

First and foremost, BERT stands for Bidirectional Encoder Representations from Transformers. But what does that actually mean to the average marketer? As I searched for the best way to answer this question, I found a great blog post from WordStream:

BERT is a neural network-based technique for natural language processing pre-training. To break that down in human-speak: “neural network” means “pattern recognition.” Natural Language Processing (NLP) means “a system that helps computers understand how human beings communicate.” So, if we combine the two, BERT is a system by which Google’s algorithm uses pattern recognition to better understand how human beings communicate so that it can return more relevant results for users.

I feel like this is a concise answer to the question “What is BERT?” and couldn’t say it better than this.

With BERT models now applied to search queries we’ve seen a lot of fluctuations in the rankings. Google stated it impacted 10% of their searches. That is an enormous number.

Why was BERT needed?

As we all know, there are different levels of searchers. Some people really know how to formulate a query, while others have more basic knowledge. Because of this range of ability, it’s easy for search engines to misunderstand a search query and not deliver the best results.

“..[Google has] built ways to return results for queries we can’t anticipate.”

– Pandu Nayak, Google Fellow and Vice President, Search

To answer why we all actually need BERT, we need to better understand what a search entails. When users run a search, they ask a question of a repository of information and are served an answer to the best of the search engine’s ability. Users search to learn, and at their core, search engines try to understand language. The nuances of language have historically proved difficult for machines to understand, and BERT helped take a leap forward. With this update, search engines can now assess the way words in a search query relate to each other.

How does BERT work?

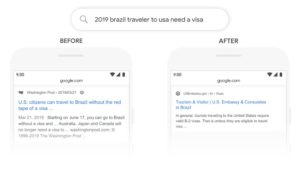

This is a very loaded question that honestly takes a lot of research to understand. You must sort through a lot of new tech terminology if you aren’t familiar with the history of how NLP works. Essentially, BERT does a better job of understanding the context of language and the intent of searchers. It uses a pre-trained model with bidirectional understanding, meaning it looks at the words both before and after a given word, to analyze what words mean and how they relate to each other. Google’s blog uses the search query “2019 brazil traveler to usa need a visa” to show how BERT works.

Prior to the October BERT update, Google Search was unable to understand that the searcher here was Brazilian, was looking to come to the USA, and wanted to know if they needed a visa. Now, with BERT, the search algorithm understands that these terms are related when used together, and the intent is apparent.
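To make the idea of word order and context a little more concrete, here is a toy Python sketch. It is not BERT and involves no neural network; the two queries and both helper functions (`bag_of_words`, `words_in_context`) are made up purely for illustration. It only shows why a keyword-only view cannot tell a Brazil-to-USA trip from a USA-to-Brazil trip, while a view that looks at each word's left and right neighbors can.

```python
from collections import Counter
from typing import List, Optional, Tuple


def bag_of_words(query: str) -> Counter:
    """Keyword-only view: which words appear and how often, ignoring order."""
    return Counter(query.lower().split())


def words_in_context(query: str) -> List[Tuple[Optional[str], str, Optional[str]]]:
    """Pair each word with its left and right neighbor (None at the edges)."""
    words = query.lower().split()
    padded = [None] + words + [None]
    return [
        (padded[i - 1], padded[i], padded[i + 1])
        for i in range(1, len(padded) - 1)
    ]


# Two hypothetical queries with the same words but opposite meanings.
to_usa = "brazil traveler to usa"
to_brazil = "usa traveler to brazil"

# The keyword-only view sees the two queries as identical.
same_keywords = bag_of_words(to_usa) == bag_of_words(to_brazil)

# The neighbor-aware view sees that "to" points at a different country.
same_context = words_in_context(to_usa) == words_in_context(to_brazil)

print("Same keywords:", same_keywords)
print("Same context:", same_context)
```

The real model learns far richer relationships than immediate neighbors, but the takeaway for marketers is the same: small words like “to” change the meaning of a query, and they now count.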

If you want to dive into the details of what makes BERT tick, check out the original 2018 paper on the open-source technology behind it – BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

Should I change anything because of this update?

Google lays it out very simply: if we have followed what they define as quality content and quality website building, we should be just fine after their updates.

— Danny Sullivan (@dannysullivan) October 28, 2019

We here at Boostability take into account these algorithm updates and make sure that we are always compliant with what Google and your clients consider important. Our clients have not seen a drop in rankings because of an algorithm update since 2013. To see how your website ranks, test it out using our free website submission tool!