How Often Is Google Crawling My Website?

As a small business owner or marketer, you know how important it is for potential customers to find you online. You’ve built a website, added your services, maybe even started a blog. But have you ever wondered how Google actually finds your website among the billions out there? Or why sometimes your new updates don’t seem to appear in search results right away?

The answer lies in understanding a fundamental process called Google Crawling. Getting a grasp of this concept is the first step towards improving your website’s visibility and ensuring your efforts are actually getting noticed by the world’s largest search engine.

What Exactly is Google Crawling?

Think of the internet as a vast, constantly growing library. Google Search acts like a meticulous librarian, aiming to index every book (or webpage) available. To do this, it uses automated programs often called ‘bots,’ ‘spiders,’ or specifically, Googlebot.

These aren’t tiny robots; they are sophisticated pieces of code that navigate the web by following links from page to page. This process of discovering and reading website content is Google Crawling. The purpose? To gather information about new and updated pages to add to Google’s massive digital library, known as the index. It’s from this index that Google pulls results when someone performs a search. As of 2025, Google’s search index is estimated to contain approximately 400 billion documents and to exceed 100 million gigabytes (100 petabytes) of storage, highlighting the vast amount of information Google processes to deliver search results.

This extensive index enables Google to handle over 13.6 billion searches per day, amounting to nearly 5 trillion searches annually.
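If you want to sanity-check those figures, the arithmetic takes only a few lines of Python. The inputs are the estimates quoted in this article, not official Google measurements.

```python
# Estimates quoted in the article (not official Google numbers).
searches_per_day = 13.6e9
index_size_gb = 100e6

searches_per_year = searches_per_day * 365
index_size_pb = index_size_gb / 1_000_000  # 1 petabyte = 1,000,000 gigabytes

print(f"{searches_per_year / 1e12:.2f} trillion searches per year")
print(f"{index_size_pb:.0f} petabytes")
```

The first line prints just under 5 trillion, which is where the "nearly 5 trillion searches annually" figure comes from.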

These automated explorers read the code of your website, analyzing content, images, and structure to understand what each page is about. It’s a massive, ongoing task!

Why is Google Crawling Crucial for Your Business?

Simply put: If Google doesn’t crawl your site, it won’t know your site exists or that you’ve updated it.

For your business website to appear in Google Search Engine Results Pages (SERPs) when potential customers search for your products or services, Google needs to have crawled and indexed your pages.

- Visibility: Regular crawling ensures Google has the latest information about your business, offerings, and content.

- Ranking Potential: Without being crawled and indexed, your pages have virtually no chance of ranking in search results.

- Trust & Authority: Frequent Google Crawl activity, especially when it finds fresh, high-quality content, signals to Google that your site is active, relevant, and trustworthy. This positively influences your site’s standing over time.

Therefore, encouraging Google to crawl your site efficiently and regularly is fundamental to any successful online marketing strategy.

(Note: While generally you want pages crawled, there might be specific pages like internal admin logins or thank-you pages you might wish to exclude. This is managed using a file called robots.txt – a topic for another day, but good to be aware of!)

How Often Does Google Crawl a Website?

This is a common question, and the answer is: it varies. Google doesn’t crawl every website with the same frequency. Several factors influence how often Googlebot visits your site:

- Update Frequency: Websites that are updated frequently with new blog posts, products, or pages are typically crawled more often – sometimes multiple times a day for very active sites. If your site rarely changes, Google might only crawl it every few days or even weeks.

- Website Authority & Popularity: Well-established websites with many high-quality backlinks tend to be crawled more often.

- Website Health: Technical issues like server errors or slow loading times can hinder crawling. If Googlebot encounters problems accessing your site, it might reduce crawl frequency.

- Crawl Budget: Google allocates finite resources (a crawl budget) to crawling the web. It spends more of that budget on healthy, popular, and frequently updated sites.

There’s no magic number, but the key takeaway is that an active, healthy, and well-structured website encourages more frequent Google Crawls.

How to Check Your Website’s Crawl Activity

Curious about how often Googlebot is visiting your site? Google provides this information directly within Google Search Console (GSC), an essential free tool for monitoring your site’s performance in Google Search.

Here’s how to find your Crawl Stats report:

- Log into Google Search Console: If you haven’t set it up yet, doing so should be a top priority.

- Navigate to Settings: Find ‘Settings’ near the bottom of the left-hand menu.

- Open Crawl Stats: Under the ‘Crawling’ section, click ‘Open Report’.

This report shows you data from the past 90 days, including the total number of crawl requests, total download size, average response time, and any availability issues Google encountered. Reviewing this can reveal if Google is having trouble accessing your site.

(Important Note: Google primarily uses its mobile crawler now due to mobile-first indexing. The Crawl Stats report will indicate which Googlebot type (Smartphone or Desktop) is mainly crawling your site. Ensuring your site works perfectly on mobile devices is critical.)
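If you also have access to your server’s access logs, you can cross-check the Crawl Stats report yourself. The sketch below is a minimal example, assuming logs in the common "combined" format; the sample lines and the function name googlebot_hits_per_day are made up for illustration. Keep in mind that a user-agent string can be spoofed, so this gives a rough count rather than a verified one.

```python
import re
from collections import Counter

# Matches the date inside the [dd/Mon/yyyy:hh:mm:ss zone] timestamp field.
DATE_RE = re.compile(r"\[(\d{2}/\w{3}/\d{4}):")

def googlebot_hits_per_day(log_lines):
    """Count requests per day whose user-agent string mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        if "Googlebot" not in line:
            continue
        match = DATE_RE.search(line)
        if match:
            hits[match.group(1)] += 1
    return hits

# Hypothetical sample lines; real logs would be read from a file.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [17/May/2025:06:12:01 +0000] "GET / HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [17/May/2025:06:12:05 +0000] "GET /blog HTTP/1.1" 200 8300 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [17/May/2025:07:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [18/May/2025:02:30:44 +0000] "GET /services HTTP/1.1" 404 310 "-" "{GOOGLEBOT_UA}"',
]

for day, count in googlebot_hits_per_day(sample_log).items():
    print(day, count)
```

With the sample data this reports two Googlebot requests on 17 May and one on 18 May; the ordinary browser visit is ignored.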

How to Encourage More Frequent Google Crawls

While you can’t force Google to crawl your site on demand (most of the time), you can definitely implement strategies to make your site more attractive and accessible to Googlebot. Here’s how:

1. Ensure Your Site is Technically Sound

Use Google Search Console to identify and fix crawl errors (like server errors ‘5xx’ or ‘not found’ ‘404’ errors) and usability issues (especially mobile usability). A technically healthy site is easier for Google to crawl.

2. Review Your Robots.txt File

Double-check this file (usually found at yourdomain.com/robots.txt) to ensure you aren’t accidentally blocking Googlebot from important pages or sections of your site.

3. Build High-Quality Backlinks

When other reputable websites link to yours (backlinks), it signals authority to Google. Think of it as a vote of confidence. Pursue links from relevant industry sites, local directories, or partners. Even a few good links are better than many low-quality ones.

4. Regularly Update and Add Content

This is crucial! Consistently adding valuable content (blog posts, case studies, new service pages) gives Google a reason to come back and crawl. Focus on demonstrating your E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness). Share your industry knowledge, showcase customer successes, and keep your existing content fresh and accurate. Mix formats: use text, images, videos, and perhaps infographics.

5. Submit an XML Sitemap

A sitemap is a file listing the important pages on your website, acting like a roadmap for search engines. While it doesn’t guarantee crawling or indexing, submitting an up-to-date sitemap via Google Search Console helps Google understand your site structure and discover your content more efficiently.
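Many website platforms generate a sitemap for you, but it helps to see how simple the file is. Here is a minimal sketch that builds one with Python’s standard library. The URLs and dates are placeholders, and build_sitemap is a hypothetical helper name rather than part of any Google tool.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return sitemap XML for a list of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder pages; replace with your own important URLs.
pages = [
    ("https://www.example.com/", "2025-05-17"),
    ("https://www.example.com/services", "2025-04-02"),
]

print(build_sitemap(pages))
```

Save the output as sitemap.xml at the root of your site, then submit that URL in Google Search Console.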

6. Use Internal Linking Strategically

Link relevant pages within your own website together. For example, link from a blog post about a service back to your main service page. This helps Google understand the relationship between your pages and allows crawl spiders to navigate your site more easily.

7. Optimize On-Page Elements

Ensure your pages have clear, descriptive title tags, well-written meta descriptions (which act as ‘ad copy’ in search results), logical URL structures, and fast loading speeds (Core Web Vitals). These improve both user experience and crawlability.
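A quick way to spot pages with a missing title tag or meta description is to scan their HTML. The following is a rough sketch using only Python’s standard library; the class and function names are illustrative, and it checks only for presence, not quality or length.

```python
from html.parser import HTMLParser

class OnPageParser(HTMLParser):
    """Collect the <title> text and the meta description of one HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def audit_page(html):
    """Return a list of on-page problems found in the given HTML."""
    parser = OnPageParser()
    parser.feed(html)
    problems = []
    if not parser.title.strip():
        problems.append("missing title tag")
    if not (parser.description or "").strip():
        problems.append("missing meta description")
    return problems

# Hypothetical page with a title but no meta description.
page = "<html><head><title>Plumbing Services</title></head><body></body></html>"
print(audit_page(page))  # ['missing meta description']
```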

8. Promote Your Content

Share your new content on social media, in email newsletters, or through industry outreach. More visibility can lead to faster discovery by Google.

How to Request Indexing for Urgent Updates

Made a crucial update or launched a vital new page you want Google to see quickly? While regular crawling happens automatically, Google Search Console offers a way to request indexing for a specific URL:

- Sign into Google Search Console.

- Use the URL Inspection Tool: Paste the full URL of the page you want indexed into the search bar at the top.

- Test Live URL (Optional but Recommended): Click this to ensure Google can currently access the page without issues.

- Click “Request Indexing”: This submits your URL to a priority crawl queue.

This doesn’t guarantee instant indexing, but it tells Google this specific page is ready to be looked at, often speeding up the discovery process compared to waiting for a regular crawl. Remember, submitting the same URL multiple times won’t accelerate it further.

How To Know If Google Has Indexed Your Pages

It’s helpful to check if Google has actually indexed specific pages, especially after updates or submitting requests. Here are two primary methods:

1. Using The Google Search Bar (site: command)

This offers a quick check directly in Google search:

- Go to the Google search bar.

- Type site:yourdomain.com (replace yourdomain.com with your actual domain name).

- The results displayed are the pages from your site that Google currently has in its index.

- To check a specific page: Type site:yourdomain.com/your-specific-page-url (replace with the exact URL you want to check).

- If the page appears in the results, it’s indexed.

- If you see a message like “Your search – site:[URL] – did not match any documents,” the page is likely not indexed yet (or was just indexed and hasn’t fully appeared).
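If you check many pages this way, you can generate the search links instead of typing each one. This small sketch builds the Google search URL for a site: query; site_query_url is a hypothetical helper, and you would open the resulting link in your browser.

```python
from urllib.parse import quote_plus

def site_query_url(domain_or_page):
    """Build a Google search URL for a site: query."""
    return "https://www.google.com/search?q=" + quote_plus(f"site:{domain_or_page}")

print(site_query_url("yourdomain.com/your-specific-page-url"))
# https://www.google.com/search?q=site%3Ayourdomain.com%2Fyour-specific-page-url
```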

2. Using the Google Search Console Indexing Report

For a more detailed view, use the reports within Google Search Console:

- Log into GSC and select your property.

- Navigate to the Pages report under the Indexing section in the left-hand menu.

- This report provides a summary of your site’s indexing status, categorizing pages into:

- Not indexed: Pages Google knows about but hasn’t indexed. This section lists reasons why (e.g., ‘Excluded by noindex tag’, ‘Page with redirect’, ‘Crawled – currently not indexed’, ‘Discovered – currently not indexed’, ‘Not found (404)’). Understanding these reasons is key for troubleshooting.

- Indexed: Pages successfully indexed and potentially appearing in Google Search results.

Reviewing the ‘Not indexed’ reasons in this report is the best way to diagnose specific indexing problems.

5 Reasons Google Isn’t Indexing Your Pages

Even after following best practices, you might find some of your pages still aren’t showing up in Google search results. Technical issues or content problems can often prevent successful indexing. Here are five common culprits:

1. Your Website Doesn’t Fully Support Mobile-First Indexing

Google now primarily uses the mobile version of your website for indexing and ranking. This is called mobile-first indexing.

According to Google:

“Mobile-first indexing means Google predominantly uses the mobile version of your content for indexing and ranking.”

This means it’s crucial that the content and structure on your mobile site match your desktop site, especially for important information. If key content, links, or features are visible on desktop but hidden or missing on mobile, Google might struggle to index your site correctly or fully understand its value.

(As confirmed by Google’s John Mueller, significant differences between mobile and desktop versions can hinder the mobile-first indexing process).

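Solution: Review your site on various mobile devices and run a mobile audit with a tool such as Lighthouse in Chrome DevTools (Google retired its standalone Mobile-Friendly Test tool in December 2023). Ensure navigation, content, and functionality are consistent and accessible across both desktop and mobile versions.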

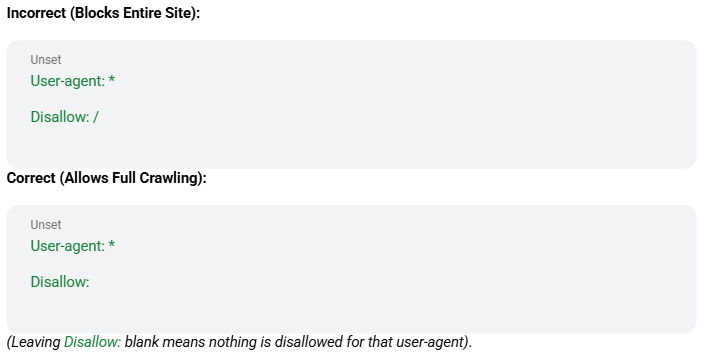

2. Issues with Your Robots.txt File

The robots.txt file, located in your website’s root directory (e.g., yourdomain.com/robots.txt), tells search engine crawlers which parts of your site they can or cannot access. It’s a powerful file, and a small mistake can have big consequences.

For instance, accidentally including Disallow: / under User-agent: * (which means “for all bots”) tells all search engines not to crawl any pages on your site!

Solution: Carefully review your robots.txt file. Ensure you aren’t unintentionally blocking important pages or the entire site. Check how Google reads the file in Google Search Console’s robots.txt report, which replaced the older robots.txt Tester tool. If you’re not comfortable editing this file, consult an SEO specialist. If you need to keep specific pages out of the index without blocking crawling, use noindex tags on those individual pages instead.
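To see the difference a single line makes, you can test robots.txt rules locally with Python’s built-in parser. This is a simplified sketch: the rules and URLs are examples, can_googlebot_fetch is a hypothetical helper, and Python’s parser does not match Google’s handling in every detail (wildcards, for instance), so treat the result as a first check only.

```python
from urllib.robotparser import RobotFileParser

def can_googlebot_fetch(robots_txt, url):
    """Return True if the given robots.txt text lets Googlebot fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)

# Blocks every crawler from the whole site (the accidental mistake described above).
blocking_rules = """User-agent: *
Disallow: /
"""

# Blocks only the admin area.
selective_rules = """User-agent: *
Disallow: /admin/
"""

print(can_googlebot_fetch(blocking_rules, "https://yourdomain.com/services"))      # False
print(can_googlebot_fetch(selective_rules, "https://yourdomain.com/services"))     # True
print(can_googlebot_fetch(selective_rules, "https://yourdomain.com/admin/login"))  # False
```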

3. Problems with Page Redirects

Redirects guide users and search engines from one URL to another. While necessary sometimes (e.g., after changing a page URL), incorrect implementation or excessive redirect chains can confuse crawlers and hinder indexing. Common reasons for using redirects include:

- Changing domain names

- Updating URL structures (permalinks)

- Fixing typos in URLs

- Moving from HTTP to HTTPS

Think of redirects like mail forwarding:

- 301 Redirect (Permanent): This is like filing a permanent change of address. It tells search engines the page has moved permanently to a new location. This is usually the best option for SEO as it passes link value (or ‘link equity’) to the new URL.

- 302 Redirect (Temporary): This is like a temporary forwarding order. It indicates the move is not permanent, and the original URL might be used again. Useful for A/B testing or temporary promotions, but generally not ideal for permanently moved content. (Google does state that 302s can pass link value, but 301 is clearer for permanent changes).

- (307 Redirect: Also a temporary redirect, similar to 302, but used in more specific technical situations related to HTTP methods.)

Solution: Use 301 redirects for any content that has permanently moved. Minimize redirect chains (Page A -> Page B -> Page C). Use tools like Screaming Frog or online redirect checkers to find and fix redirect issues or broken links (404 errors).
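To make the idea of a redirect chain concrete, here is a small sketch that follows a URL through a table of redirects and lists every hop. It works on a plain dictionary of source and target paths, which you would fill from a crawl or your server configuration; the paths and the redirect_chain name are illustrative.

```python
def redirect_chain(start_url, redirects, max_hops=10):
    """Follow a URL through a {source: target} redirect map and return every hop."""
    chain = [start_url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        next_url = redirects[chain[-1]]
        looped = next_url in chain
        chain.append(next_url)
        if looped:
            break  # stop on a redirect loop
    return chain

# Hypothetical redirect table: Page A -> Page B -> Page C.
redirects = {"/page-a": "/page-b", "/page-b": "/page-c"}

chain = redirect_chain("/page-a", redirects)
print(" -> ".join(chain), f"({len(chain) - 1} hops)")
```

Any result with more than one hop is a chain worth flattening: point /page-a straight at /page-c with a single 301.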

4. All Domain Variations Aren’t Verified in Search Console

Your website might be accessible through multiple URLs (e.g., http://example.com, https://example.com, http://www.example.com, https://www.example.com). Google sees these as potentially different sites. If you also use subdomains (like blog.example.com), those are separate too.

If you haven’t verified ownership of all relevant versions in Google Search Console, Google might not crawl or index content across all variations effectively.

Solution: The easiest approach is to create and verify a Domain Property in Google Search Console. This covers all variations (http/https, www/non-www) and subdomains under that domain automatically. If using the older URL-prefix properties, ensure you have added and verified all major versions, including your preferred canonical version (usually https:// with or without www).
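If you stay with URL-prefix properties, it helps to list every variation you need to add. This short sketch prints the four common versions of a domain; url_prefix_variants is a hypothetical helper, it requires Python 3.9 or later, and subdomains such as blog.example.com would still need to be added separately.

```python
def url_prefix_variants(domain):
    """List the http/https and www/non-www versions of a domain."""
    bare = domain.removeprefix("www.")
    return [
        f"{scheme}://{host}"
        for scheme in ("http", "https")
        for host in (bare, f"www.{bare}")
    ]

for variant in url_prefix_variants("example.com"):
    print(variant)
```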

5. Poor or “Thin” Content Quality

While not strictly an indexing block, Google aims to index and rank high-quality, helpful content. If your pages have very little content, duplicate content from other sites, or content that doesn’t provide real value to users, Google may crawl them but choose not to index them prominently, or rank them very low. Google often refers to low-value pages as “thin content.”

Google prioritizes content demonstrating E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness). Pages lacking substance don’t meet user expectations and are less likely to earn visibility.

Solution: Focus on creating unique, substantial, and valuable content that thoroughly answers user questions or meets their needs. Instead of focusing purely on word count, aim for depth, accuracy, originality, and a positive user experience. Regularly review and improve existing content to ensure it remains relevant and helpful.

Making Google Crawl Work For You

Understanding the Google Crawl process is key to improving your website’s SEO performance. By ensuring your site is technically sound, regularly updated with valuable content, and easy for Googlebot to navigate, you encourage more frequent crawls. This leads to better indexing, increased visibility in search results, and ultimately, more potential customers finding your business.

SEO is an ongoing effort that requires consistent attention and expertise. If you’re a busy small business owner who wants to ensure your website is effectively crawled, indexed, and optimized to attract more customers—without having to become an SEO expert yourself—we can help.

At Boostability, we specialize in data-driven SEO strategies tailored for businesses like yours. We handle the technical complexities, content optimization, and authority building so you can focus on running your business.

Ready to elevate your online presence and increase customer acquisition?

➡️ Request Your Complimentary SEO Consultation Today!

➡️ Review Our Affordable SEO Service Packages

➡️ Examine Our Small Business SEO Success Stories

Originally published Aug 17, 2022; last updated May 17, 2025.

Awesome article! The two things that I want to work on are adding fresh content more often and earning more inbound dofollow links. One question I have had is if Google regards comments as fresh content. If so, in theory, the more interaction an article gets by people leaving comments, the more often Google will crawl the site. Anyone know for sure if comments are perceived by Google as fresh content worthy of more frequent crawling?

@disqus_BkikKRpjfe:disqus I don’t actually have an answer, but that is a really good question that I wouldn’t have even thought of – I want to know too now!

That is a great question. It depends how the site is built out and how the commenting system works. For example, if your comments are built out as a plugin, that means they’re also most likely built out as an iFrame within the page and not just a part of the page. Therefore, your iFrame is technically getting credit for new, engaging content every time a comment is left. However, Google gets smarter and smarter in how they crawl a page. At this point, I don’t know that they are doing a perfect job of linking iFrame content in as credit toward the page the iFrame is in because they’d have to program it extensively in order to give credit only if the iFrame site is owned by the same site where the iFrame exists. Otherwise, anyone could add an iFrame of these comments, for example, to their site and receive credit. However, they do easily track and understand visits, repeat visits, unique visits, bounce rate, and all those tasty Google Analytics numbers which translate to quite the SEO boosting treat! So much of how relevancy is defined comes down to how the site is surfed. Google has shifted to trusting the user’s desire and intent rather than trying to derive relevancy from the code on the page. Obviously, if people are coming back to the site often, that is more important than the number of unique words presented on a page and the percentage of words that may be considered “keywords,” for example. Does that make sense @disqus_BkikKRpjfe:disqus ? And answer your curiosity too @jamisonmichaelfurr:disqus ?

Yes! thanks so much @cazbevan:disqus 🙂

hmmm about: “Google visits them an average of 17 times a day.” are you sure about that?

Thought it was more like an average of 17 pages per day (over the last 90 days) – the metric is “Pages crawled per day”.

Looking at the crawl it shows that google comes in almost every day (for a short time) grabs a number of pages and comes back the next day to grab some more.

Does not mean all the other metrics are updated daily as they only seem to change 1 or 2 times a week. (eg. index status, links to your website..)

go4it,

Rene

As for fresh content, does changing the home page with new copy or layout count? Does Google Blogger count as fresh content, if that’s not part of your website pages? Is changing copy on inner pages a good idea or a waste of time? Seems that fresh content would only be totally new pages, or am I wrong?

These are such great questions!

Updating content across your site does count as new, updated, relevant content. However, you also don’t want to be updating all the time or changing what you have too often. Regular updates are expected – something like a once-a-year overhaul of your website to make sure all of the content remains relevant and no links are broken. New content can be added to the site by answering new, relevant questions and by updating pages that are expected to change more often, like testimonials, case studies, FAQ pages, and blogs.

As for a Blogger blog: although Blogger is owned by Google, unless you are hosting the blog directly on the same server as your website, it doesn’t really count as updates to your site so much as Google seeing it as another site that is linking back to your site. Does that make sense? To get the best results from blog updates, you’ll want to use a service that will take each new blog post and publish it to a given folder within the same root folder where your site exists with your web host.

Does that answer all your questions?

Great article Jake! This is quite a common question among clients and you hit the nail on the head with your answer.

What advice do you generally give when being asked this question?

Good job Jake! This is a helpful article, and like you mentioned, we should have fresh content if we want Google to crawl the website more frequently. You mention that adding a blog will be beneficial to always have fresh content on the website, but there are some cases where clients won’t do it, so I guess my question is: do you recommend changing the content on the pages of the website? If so, how often would you change the content?

I thought my site was being crawled pretty regular… and perhaps it is… tho things seem to take a while to trickle thru google… that is… things don’t always happen right away… even after a definite google event…

You can see how many pages Google, Bing, and Yahoo are aware of with this free app http://www.FreeWebsiteScore.com

What about using the Fetch As Google feature? Isn’t that the simplest way to make Google crawl your webpages?

Fetch as Google does allow your website to be shown as Google sees it. You can ask Google to crawl your website in Google Search Console. However, these features do not guarantee listing your entire website as potential search items. The only way to ensure your site is mapped correctly in Google’s eyes is to give them a specific XML file to crawl. This tells Google more than it can see just from following links on your site.